Hey guys, in this article we will discuss GANs (Generative Adversarial Networks).

Generative Adversarial Networks (GANs) were introduced in 2014 by Ian J. Goodfellow and co-authors.

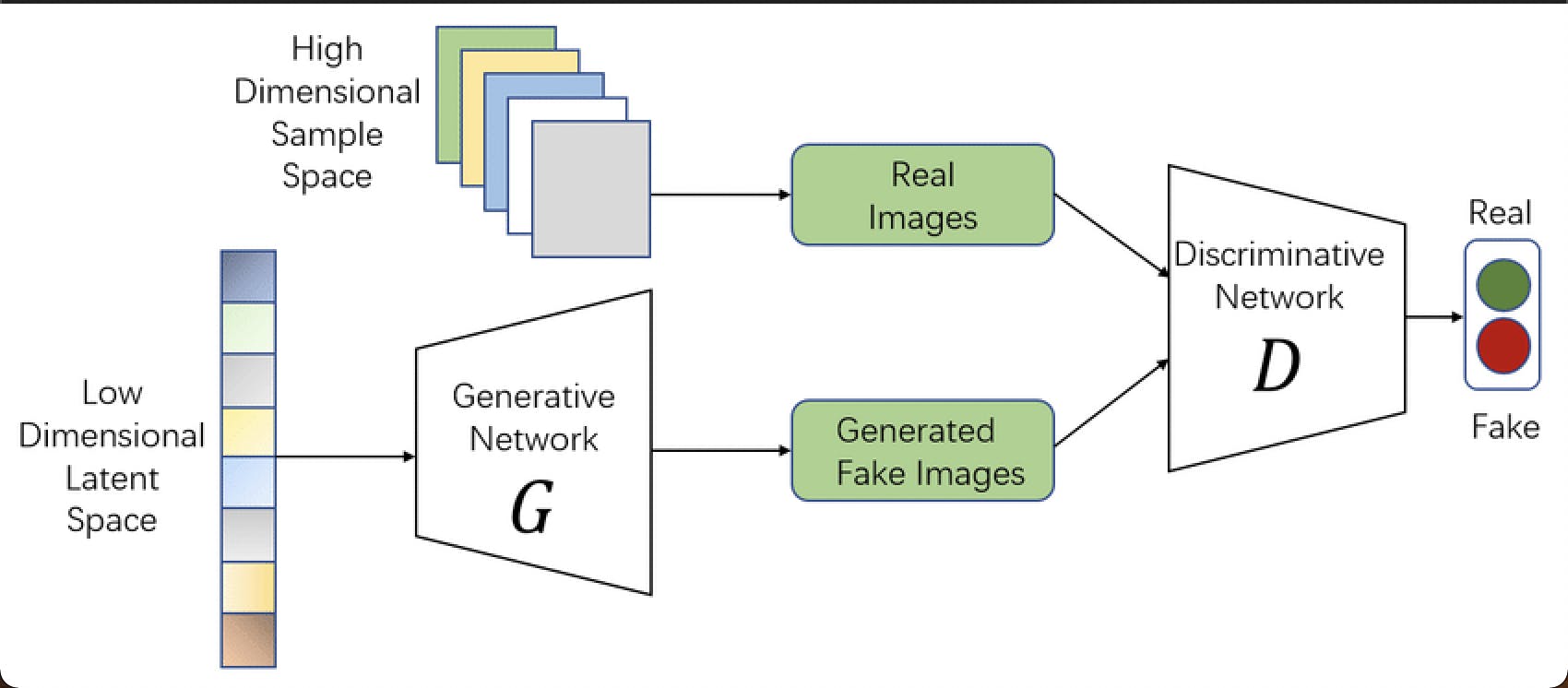

GANs are used for unsupervised learning tasks in machine learning. A GAN mainly consists of two models that automatically discover and learn the patterns in the input data.

The two models are known as the Generator and the Discriminator.

They compete with each other to scrutinize, capture, and replicate the variations within a dataset. GANs can be used to generate new examples that could have been drawn from the original dataset.

Let's first see why and where we use GANs:

- Photo-Realistic Single Image Super-Resolution.

- Deep Generative Image Models using a Laplacian Pyramid of Adversarial Networks.

- Generative Adversarial Text to Image Synthesis.

- Image generation, video generation, voice generation, and more.

If there is a dataset with 'n' real images, the generator network creates 'n' fake images, and the discriminator tries to tell the real images from the fake ones. The two models are trained together in an adversarial, zero-sum game until the discriminator is fooled about half the time, which means the generator is producing plausible examples.
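The zero-sum game described above is usually written as the minimax objective from the original 2014 paper. The symbols are introduced here only for this formula: $G$ is the generator, $D$ is the discriminator, $x$ is a real sample drawn from the data distribution $p_{\text{data}}$, and $z$ is a noise vector drawn from a prior $p_z$.

$$
\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}(x)}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z(z)}\big[\log\big(1 - D(G(z))\big)\big]
$$

The discriminator tries to make $V$ large by pushing $D(x)$ toward 1 and $D(G(z))$ toward 0, while the generator tries to make it small. At the ideal equilibrium, $D$ outputs $\tfrac{1}{2}$ for every input, which is the "fooled about half the time" condition.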

Generator:

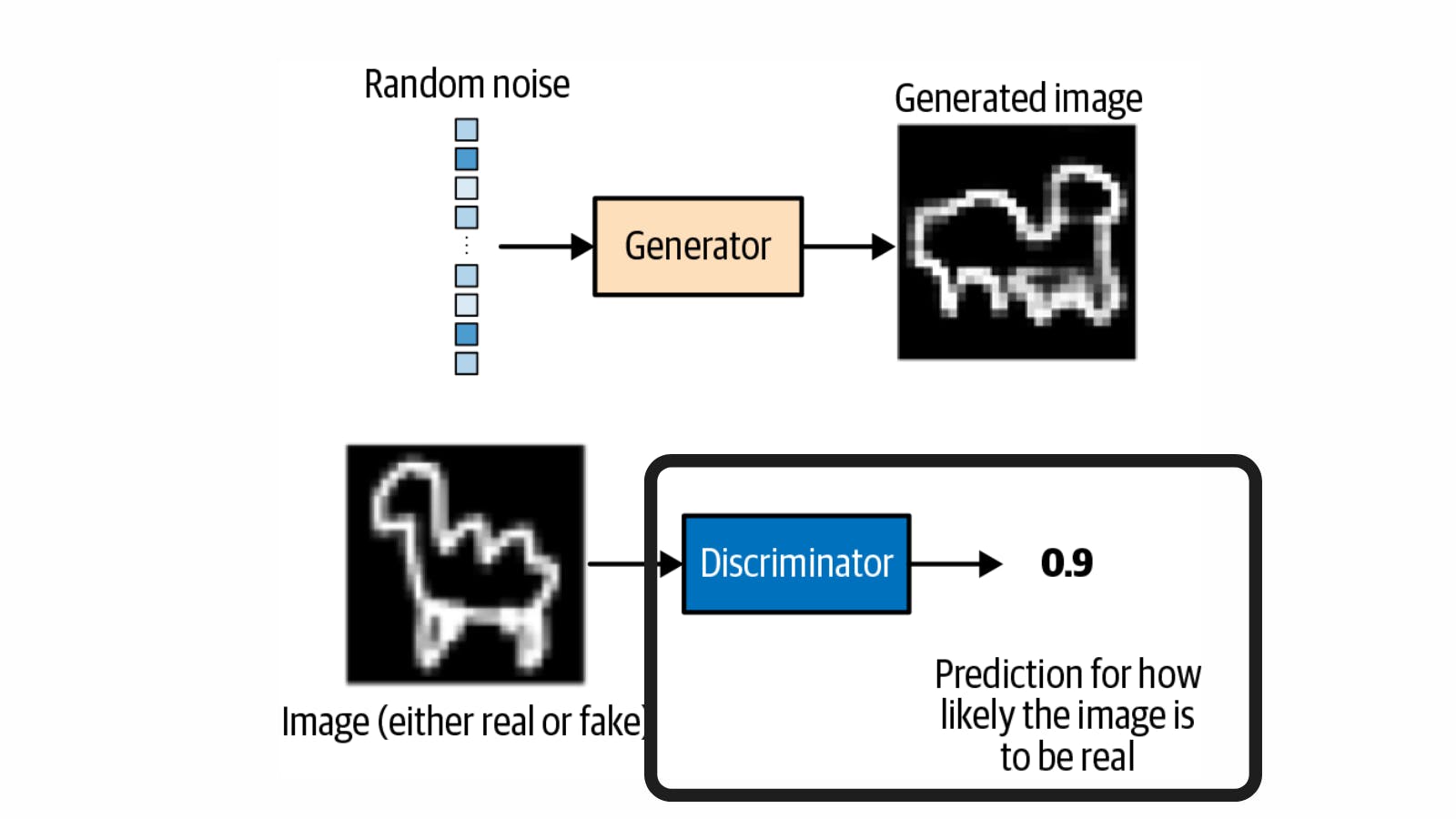

A Generator in a GAN is a neural network that creates the fake data the discriminator is trained on. It learns to generate plausible data. The generated examples become negative training examples for the discriminator. It takes a fixed-length random noise vector as input and generates a sample.
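To make the "noise vector in, sample out" idea concrete, here is a minimal sketch in plain Python. It assumes a single untrained layer with a `tanh` squashing function; the names `make_generator` and `sample_noise` and the sizes 8 and 4 are made up for illustration, and a real generator would have many more layers.

```python
import math
import random


def make_generator(noise_dim, sample_dim, seed=0):
    """Build a toy one-layer generator with random, untrained weights."""
    rng = random.Random(seed)
    weights = [[rng.gauss(0.0, 0.1) for _ in range(noise_dim)]
               for _ in range(sample_dim)]
    biases = [0.0] * sample_dim

    def generate(noise):
        if len(noise) != noise_dim:
            raise ValueError("noise vector must have length %d" % noise_dim)
        # Each output value is a weighted sum of the noise entries,
        # squashed into the range [-1, 1] by tanh.
        return [math.tanh(sum(w * z for w, z in zip(row, noise)) + b)
                for row, b in zip(weights, biases)]

    return generate


def sample_noise(noise_dim, rng):
    """Draw a fixed-length vector of standard normal noise."""
    return [rng.gauss(0.0, 1.0) for _ in range(noise_dim)]


rng = random.Random(42)
generate = make_generator(noise_dim=8, sample_dim=4)
fake_sample = generate(sample_noise(8, rng))
print(fake_sample)  # four values, one generated "sample"
```

Every call with a new noise vector gives a different sample, which is how a trained generator can produce many different fake examples.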

Discriminator:

For image data, the discriminator is usually a Convolutional Neural Network consisting of many hidden layers and one output layer. The major difference is that the discriminator's output layer only has to separate two classes, real and fake, unlike a general CNN classifier, whose output layer has one output per label it is trained on.

The output of the discriminator is a value between 0 and 1 because of the activation function chosen for this task (typically a sigmoid). An output close to 1 means the discriminator considers the data real, and an output close to 0 means it considers the data fake.
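The sketch below shows how a sigmoid output layer turns a raw score into such a value. It assumes a single linear layer on top of some already extracted features; `discriminator_output`, `label`, the weights, and the 0.5 threshold are illustrative choices, not part of any particular library.

```python
import math


def sigmoid(t):
    """Numerically stable logistic function; maps any real score into [0, 1]."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def discriminator_output(features, weights, bias):
    """Final layer of a toy discriminator: weighted sum, then sigmoid."""
    score = sum(w * f for w, f in zip(weights, features)) + bias
    return sigmoid(score)


def label(prob, threshold=0.5):
    """Turn the probability into a hard real/fake decision."""
    return "real" if prob >= threshold else "fake"


weights, bias = [2.0, 1.0], 0.0  # hypothetical trained parameters
p_high = discriminator_output([1.0, 1.0], weights, bias)
p_low = discriminator_output([-1.0, -1.0], weights, bias)
print(p_high, label(p_high))
print(p_low, label(p_low))
```

The hard 0/1 decision only appears after thresholding. During training, the probability itself is what gets compared with the target.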

The discriminator is trained on the real data so that it learns to recognize what actual data looks like and which features data should have to be classified as real.

We can imagine the discriminator as a trained expert who can tell what’s real and what’s fake, while the Generator tries to fool the Discriminator into believing that the generated data is real. With each unsuccessful attempt, the Generator learns and improves so that its data looks more realistic. In other words, it is a competition between the Generator and the Discriminator.

Training the Generator and Discriminator:

Discriminator training:

Initially, random noise is sent to the generator, which at first produces useless, noisy images.

Now, the discriminator takes in two inputs: one is the image generated by the generator, and the other is a sample of real-world images.

The Discriminator outputs a probability for each input image. An error is calculated by comparing the probabilities of the generated images with 0 and the probabilities of the real-world images with 1.

After calculating the individual errors, it calculates the cumulative error (loss), which is backpropagated, and the weights of the Discriminator are adjusted.
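The error calculation described above can be sketched with binary cross-entropy, which is the usual way of "comparing a probability with 0 or 1". The function names `bce` and `discriminator_loss` and the example probabilities are assumptions made for illustration.

```python
import math


def bce(prob, target, eps=1e-12):
    """Binary cross-entropy between one predicted probability and a 0/1 target."""
    prob = min(max(prob, eps), 1.0 - eps)  # avoid log(0)
    return -(target * math.log(prob) + (1.0 - target) * math.log(1.0 - prob))


def discriminator_loss(real_probs, fake_probs):
    """Cumulative discriminator error: real images vs 1, generated images vs 0."""
    real_error = sum(bce(p, 1.0) for p in real_probs) / len(real_probs)
    fake_error = sum(bce(p, 0.0) for p in fake_probs) / len(fake_probs)
    return real_error + fake_error


# A discriminator that separates the two groups well has a low loss ...
good = discriminator_loss(real_probs=[0.9, 0.8], fake_probs=[0.1, 0.2])
# ... while one that outputs 0.5 for everything is only guessing.
guessing = discriminator_loss(real_probs=[0.5, 0.5], fake_probs=[0.5, 0.5])
print(good, guessing)
```

In a real framework this loss would be backpropagated automatically. Here it only shows which number the weight update tries to reduce.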

This is how a Discriminator is trained.

Generator training:

We also need to backpropagate an error to the Generator so that it can adjust its weights and learn to generate better images, just as we did for the Discriminator.

The Generator again takes random noise as input and generates a new batch of images. These newly generated images are fed to the Discriminator, which again calculates probabilities.

Now, an error will be calculated by comparing the probabilities of generated images with 1.
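Comparing the probabilities of generated images with 1 is, again, a binary cross-entropy with the target flipped. A minimal sketch follows; `generator_loss` and the example probabilities are illustrative names and values, not taken from a library.

```python
import math


def generator_loss(fake_probs, eps=1e-12):
    """Generator error: how far the discriminator's outputs are from 1."""
    total = 0.0
    for p in fake_probs:
        p = min(max(p, eps), 1.0)  # avoid log(0)
        total += -math.log(p)
    return total / len(fake_probs)


# If the discriminator is fooled (outputs near 1), the generator's loss is small.
fooled = generator_loss([0.9, 0.9])
# If the discriminator spots the fakes (outputs near 0), the loss is large.
caught = generator_loss([0.1, 0.1])
print(fooled, caught)
```

The target is 1 because the generator is rewarded when the discriminator labels its images as real, the opposite of what the discriminator's own training step wants.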

As before, the individual errors are combined into a cumulative error, which is backpropagated through the Discriminator to the Generator. Only the weights of the Generator are adjusted in this step.

This is how the Generator is trained.
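Putting the two steps together, the sketch below alternates one discriminator update and one generator update on a deliberately tiny problem. Everything in it is an assumption made for illustration: the "real data" are numbers drawn around 4.0, the generator is a line `a * z + b`, the discriminator is a single sigmoid unit, the batch size is 1, and the gradients are written out by hand instead of using a deep learning framework.

```python
import math
import random


def sigmoid(t):
    """Numerically stable logistic function."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def train_toy_gan(steps=200, lr=0.05, seed=0):
    rng = random.Random(seed)
    a, b = 1.0, 0.0  # generator parameters: G(z) = a * z + b
    w, c = 0.1, 0.0  # discriminator parameters: D(x) = sigmoid(w * x + c)

    for _ in range(steps):
        # Discriminator step: real sample vs target 1, fake sample vs target 0.
        x_real = rng.gauss(4.0, 0.5)
        z = rng.gauss(0.0, 1.0)
        x_fake = a * z + b
        p_real = sigmoid(w * x_real + c)
        p_fake = sigmoid(w * x_fake + c)
        # Gradient of -[log(p_real) + log(1 - p_fake)] with respect to w and c.
        grad_w = (p_real - 1.0) * x_real + p_fake * x_fake
        grad_c = (p_real - 1.0) + p_fake
        w -= lr * grad_w
        c -= lr * grad_c

        # Generator step: fresh noise, fake sample vs target 1.
        # The discriminator's weights are used but not changed here.
        z = rng.gauss(0.0, 1.0)
        x_fake = a * z + b
        p_fake = sigmoid(w * x_fake + c)
        # Gradient of -log(p_fake) with respect to the generator's output.
        grad_out = (p_fake - 1.0) * w
        a -= lr * grad_out * z
        b -= lr * grad_out

    return (a, b), (w, c)


generator_params, discriminator_params = train_toy_gan()
print("generator:", generator_params)
print("discriminator:", discriminator_params)
```

This toy setup is not guaranteed to converge, and the two models can keep chasing each other. It is only meant to show the alternating order of the two updates and which weights each step changes.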

Outro:

I hope this article was useful and gave you a basic understanding of Generative Adversarial Networks (GANs). GANs are widely applied in:

- Generating Images

- Super Resolution

- Image Modification

- Photorealistic images

- Face Ageing

and more.

I would encourage you to dig deeper and learn the inner workings of these networks, including how the weights are adjusted and how backpropagation works. It would be more interesting to learn.

HAPPY LEARNING

-JHA